What are the applications of complex programmable logic devices?

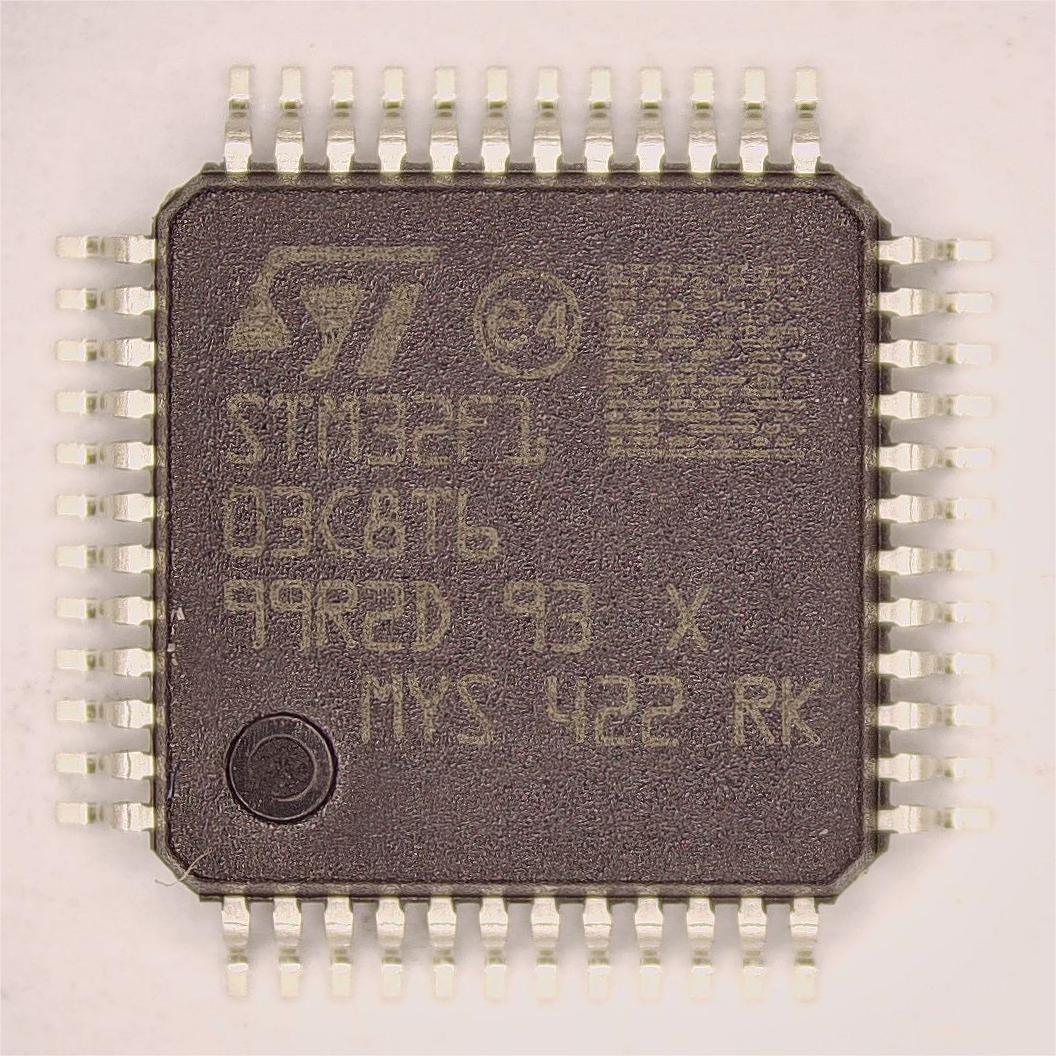

Complex programmable logic devices (CPLDs) emerged in the mid-1980s, driven by steady advances in semiconductor technology and by users' growing demand for higher device integration.

Many manufacturers produce complex programmable logic devices (CPLDs), in a wide range of families and structures, but most of them use one of two basic architectures. The first is the product-term-based CPLD, whose logic cells inherit the product-term structure of simple PLDs such as PAL and GAL. Most CPLDs available today are of this type.

Each logic block in a CPLD resembles a small PLD. A logic block typically contains 4 to 20 macrocells, and each macrocell generally consists of a product-term array, a product-term allocator, and a programmable register.
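To make the macrocell structure described above concrete, here is a minimal Python sketch of a product-term logic block. It is a behavioral toy model under stated assumptions, not any vendor's architecture: a product term is represented as a dictionary of required input values (the AND array), the allocator simply ORs together the terms assigned to a macrocell, and an optional D flip-flop stands in for the programmable register. The class and function names (`Macrocell`, `LogicBlock`, `eval_term`) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical representation: a product term is an AND of literals,
# mapping input name -> required value (True = true literal, False = complement).
ProductTerm = Dict[str, bool]


def eval_term(term: ProductTerm, inputs: Dict[str, bool]) -> bool:
    """Product-term array: AND of every literal in the term."""
    return all(inputs[name] == value for name, value in term.items())


@dataclass
class Macrocell:
    terms: List[ProductTerm]      # product terms allocated to this macrocell
    registered: bool = False      # True: output comes from a D flip-flop
    _q: bool = field(default=False, init=False)  # register state, starts at 0

    def comb(self, inputs: Dict[str, bool]) -> bool:
        """Product-term allocation: OR together the allocated terms (sum of products)."""
        return any(eval_term(t, inputs) for t in self.terms)

    def output(self, inputs: Dict[str, bool]) -> bool:
        """Combinational result, or the stored register value if registered."""
        return self._q if self.registered else self.comb(inputs)

    def clock(self, inputs: Dict[str, bool]) -> None:
        """Clock edge: a registered macrocell captures its sum-of-products."""
        if self.registered:
            self._q = self.comb(inputs)


@dataclass
class LogicBlock:
    macrocells: List[Macrocell]   # a real logic block typically holds 4 to 20

    def outputs(self, inputs: Dict[str, bool]) -> List[bool]:
        return [m.output(inputs) for m in self.macrocells]

    def clock(self, inputs: Dict[str, bool]) -> None:
        for m in self.macrocells:
            m.clock(inputs)


# Usage: one combinational XOR macrocell and one registered AND macrocell.
xor_cell = Macrocell(terms=[{"a": True, "b": False}, {"a": False, "b": True}])
and_reg = Macrocell(terms=[{"a": True, "b": True}], registered=True)
block = LogicBlock([xor_cell, and_reg])

inp = {"a": True, "b": True}
print(block.outputs(inp))  # [False, False]: register not clocked yet
block.clock(inp)
print(block.outputs(inp))  # [False, True]
```

The XOR example shows why the allocator matters: a function needing two product terms only fits in a macrocell that has at least two terms available, which is the resource the product-term allocator distributes among neighboring macrocells in real devices.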

So, what are the application scenarios of complex programmable logic devices?

The arrival of reconfigurable PLDs (programmable logic devices) based on SRAM (static random-access memory) has made it possible for system designers to change a PLD's logic function dynamically while the circuit is in operation.

Such a PLD stores its configuration data in SRAM cells. This data determines the interconnections and logic functions inside the device, so changing the data changes the device's logic function.
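The idea that configuration data alone fixes the logic function can be illustrated with a small Python sketch. As a simplifying assumption not taken from the text, the configurable cell is modeled as a 2-input lookup table whose four SRAM bits hold the truth table; real SRAM-based devices also use configuration bits to set routing switches, which this sketch omits. The names `ConfigurableCell`, `AND_CONFIG` and `XOR_CONFIG` are hypothetical.

```python
from typing import List


class ConfigurableCell:
    """Toy SRAM-configured logic cell, modeled (by assumption) as a 2-input LUT.

    The four SRAM bits store the truth table; the bit index is (a << 1) | b.
    Routing configuration, present in real devices, is not modeled.
    """

    def __init__(self) -> None:
        self.sram: List[int] = [0, 0, 0, 0]  # volatile configuration bits

    def configure(self, bits: List[int]) -> None:
        """Write new configuration data; this alone changes the logic function."""
        if len(bits) != 4 or any(b not in (0, 1) for b in bits):
            raise ValueError("expected four configuration bits (0 or 1)")
        self.sram = list(bits)

    def evaluate(self, a: int, b: int) -> int:
        """Look up the output for inputs a, b in the stored truth table."""
        return self.sram[(a << 1) | b]


# Hypothetical configuration images for two different functions.
AND_CONFIG = [0, 0, 0, 1]
XOR_CONFIG = [0, 1, 1, 0]

cell = ConfigurableCell()
cell.configure(AND_CONFIG)
print([cell.evaluate(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 0, 0, 1]
cell.configure(XOR_CONFIG)
print([cell.evaluate(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 1, 1, 0]
```

The hardware (the `ConfigurableCell` object) is the same in both cases; only the contents of `sram` differ, which is the property that makes dynamic reconfiguration possible.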

Because SRAM is volatile, the configuration data must also be kept in non-volatile memory outside the PLD, such as EPROM, EEPROM or flash memory. The system can then download the data into the PLD's SRAM cells at the appropriate time, for example at power-up or when a different function is needed, which is what enables in-circuit reconfigurability (ICR).
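The load-and-reload sequence described above can be sketched in Python as follows. This is a behavioral model under assumptions, not a real configuration protocol: `NonVolatileStore` stands in for an external EPROM/EEPROM/flash chip holding named configuration images, `SramPld` loses its configuration whenever power is removed, and the image names `"default"` and `"alt"` as well as the helpers `boot` and `reconfigure` are hypothetical.

```python
from typing import Dict, List, Optional


class NonVolatileStore:
    """Stands in for an external EPROM/EEPROM/flash holding configuration images."""

    def __init__(self, images: Dict[str, List[int]]) -> None:
        self._images = {name: list(bits) for name, bits in images.items()}

    def read(self, name: str) -> List[int]:
        return list(self._images[name])


class SramPld:
    """SRAM-based PLD model: configuration is lost whenever power is removed."""

    def __init__(self) -> None:
        self.sram: Optional[List[int]] = None
        self.powered = False

    def power_on(self) -> None:
        self.powered = True
        self.sram = None  # SRAM holds no valid configuration after power-up

    def power_off(self) -> None:
        self.powered = False
        self.sram = None  # volatile: contents disappear

    def download(self, bits: List[int]) -> None:
        if not self.powered:
            raise RuntimeError("device must be powered to accept configuration")
        self.sram = list(bits)

    @property
    def configured(self) -> bool:
        return self.sram is not None


def boot(pld: SramPld, store: NonVolatileStore, image: str = "default") -> None:
    """Power-up: copy the default image from non-volatile memory into SRAM."""
    pld.power_on()
    pld.download(store.read(image))


def reconfigure(pld: SramPld, store: NonVolatileStore, image: str) -> None:
    """In-circuit reconfiguration: load another image without removing the device."""
    pld.download(store.read(image))


store = NonVolatileStore({"default": [0, 0, 0, 1], "alt": [0, 1, 1, 0]})
pld = SramPld()
boot(pld, store)
print(pld.sram)        # [0, 0, 0, 1]
reconfigure(pld, store, "alt")
print(pld.sram)        # [0, 1, 1, 0]
pld.power_off()
print(pld.configured)  # False
```

After `power_off`, the device must be booted again from the store, which is exactly why the non-volatile copy outside the PLD is required.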